My last time on an organized baseball team was nearly a decade ago – in the Fall of 2015 at Cornerstone University. I miss it a lot and will be a fan of the game for life. In the short years since I played, so much about the ways teams think about player development has changed, primarily due to advances in high-speed data-collection technology and computer vision. The sort of stuff they wrote about in The MVP Machine.

I remember in my later years of high school showing up to the batting cages and watching my hitting coach tinker with this little device mounted on the knob of his bat, which could track your bat path in 3D (I don’t remember what it was called, but it looked like this). On a nearby iPhone, you could watch it visualize the path of the bat in real time (like you’ll often now see in golf broadcasts). It blew my mind!

Though this was my first introduction to modern baseball tech, it wasn’t until I was interning with the Orioles in 2019-2020 that I really gained an interest in how this new tech was changing player development. I wasn’t working on projects with high-frequency data for the team, but I was following baseball news, and generally enthralled with the pitch design content coming out of places like Driveline Research, and the way guys like Charlie Morton were having their careers resurrected by coaching staffs that embraced these new approaches.

Even with this interest and my technical bent, “cameras and computer vision” was pretty much the extent of my understanding of how this technology worked. By coincidence, however, at iRobot I began working closely with spatial data, which required me to dust off my long-stale geometry skills and re-learn how to apply them to a new domain. It was fascinating! And though I was primarily working with 2-dimensional data, it clarified my understanding of spatial geometry and brought me a lot closer to understanding the computation that can turn high-speed video into body markers, and body markers into the joint angles that are driving the use of biomechanics in player development.

So, when I learned about Driveline’s OpenBiomechanics project, an open-source repository of motion-capture data paired with clean, processed joint angles, I was certainly curious. In the rest of this post, I’m going to summarize my attempt at reproducing Driveline’s joint angles by writing my own code to process the motion-capture data.

Turning Motion Capture into Joint Angles

In the context of biomechanics, “motion-capture data” refers to a 4-dimensional dataset containing the 3-dimensional position of key points on the body across time (the 4th dimension). Since each point is mapped to a body part, together they make up an anatomical representation of the body at each point in time.

From a player development perspective, what’s most valuable about this biomechanics data is that it gives us the ability to quantify, say, the position of the rear elbow, relative to the rear shoulder, when the hitter’s front foot hits the ground. These sorts of relative body position computations are called joint angles.
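To make that data layout concrete, here is a minimal sketch of a toy trial stored as a frames × markers × coordinates array, with a lookup of the rear elbow relative to the rear shoulder at one frame. The marker names, the numbers, and the foot-plant frame index are all made up for illustration; the real dataset uses its own labels and event detection.

```python
import numpy as np

# Hypothetical marker names; the real dataset uses its own labels.
MARKERS = ["rear_shoulder", "rear_elbow"]

# Toy trial: 3 frames x 2 markers x 3 coordinates (x, y, z), in meters.
positions = np.array([
    [[0.00, 0.00, 1.40], [0.05, -0.20, 1.25]],
    [[0.02, 0.00, 1.40], [0.10, -0.18, 1.30]],
    [[0.05, 0.01, 1.41], [0.20, -0.10, 1.38]],
])


def relative_position(positions, names, marker, reference, frame):
    """Position of `marker` relative to `reference` at a single frame."""
    i, j = names.index(marker), names.index(reference)
    return positions[frame, i] - positions[frame, j]


foot_plant_frame = 1  # hypothetical event index, chosen by hand here
offset = relative_position(
    positions, MARKERS, "rear_elbow", "rear_shoulder", foot_plant_frame
)
print(offset)  # elbow position minus shoulder position at that frame
```

A raw offset like this is only a starting point: it still depends on which way the hitter is facing in the lab, which is why joint angles are expressed in body-fixed coordinate systems instead.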

The field of biomechanics has been studying body movement like this for many years, of course. What’s new is the ability to generate accurate biomechanics data using markerless motion capture (powered by computer vision), and its proliferation in player development.

Joint angles are an intuitive concept, but their calculation relies on 3D geometry that was not all that familiar to me. I’m thankful for Félix Chénier’s Kinetics Toolkit, which provides utilities to help make those joint angle calculations, and features some of the clearest and most accessible documentation you’ll find on the internet for “3D rigid body geometry”.

I spent so much time in the docs, I even found and fixed a minor bug.

Calculating the rotation angles for each joint requires first establishing a coordinate system that represents the orientation of each body part the joint is composed of. For the right (rear) shoulder of a right-handed hitter, for example, we can compute the joint angles by looking at the orientation of the right humerus relative to the right scapula. Here’s a view from behind the hitter showing how that works.
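In code, the same two steps can be sketched in plain NumPy: build an orthonormal coordinate system for each segment from three markers, then read three angles off the rotation between the two systems. This is an illustrative sketch, not the Kinetics Toolkit API or Driveline’s method. The marker choices and the XYZ rotation sequence are assumptions; the real convention differs from joint to joint.

```python
import numpy as np


def build_frame(origin, along, in_plane):
    """Return a 3x3 rotation matrix whose columns are a segment's x, y, z axes.

    x points from `origin` toward `along`; `in_plane` is a third marker that
    fixes the xy-plane. It must not lie on the x axis, or z is undefined.
    """
    x = along - origin
    x = x / np.linalg.norm(x)
    z = np.cross(x, in_plane - origin)
    z = z / np.linalg.norm(z)
    y = np.cross(z, x)  # completes a right-handed system
    return np.column_stack([x, y, z])


def cardan_xyz_degrees(r_proximal, r_distal):
    """Angles (a, b, c) such that the distal frame, expressed in the proximal
    frame, equals Rx(a) @ Ry(b) @ Rz(c). The XYZ sequence is an assumption."""
    rel = r_proximal.T @ r_distal
    a = np.arctan2(-rel[1, 2], rel[2, 2])
    b = np.arcsin(np.clip(rel[0, 2], -1.0, 1.0))
    c = np.arctan2(-rel[0, 1], rel[0, 0])
    return np.degrees([a, b, c])


# Toy check: a "humerus" frame rotated 30 degrees about the z axis of a
# "scapula" frame that is aligned with the lab axes.
cos30, sin30 = np.cos(np.radians(30)), np.sin(np.radians(30))
origin = np.zeros(3)
scapula = build_frame(origin, np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0]))
humerus = build_frame(
    origin, np.array([cos30, sin30, 0.0]), np.array([-sin30, cos30, 0.0])
)
angles = cardan_xyz_degrees(scapula, humerus)
print(angles)  # approximately [0, 0, 30]
```

Running this per frame gives one angle trace per axis, which is the shape of output that can then be compared against a reference.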

Comparing my Calculations to Driveline’s

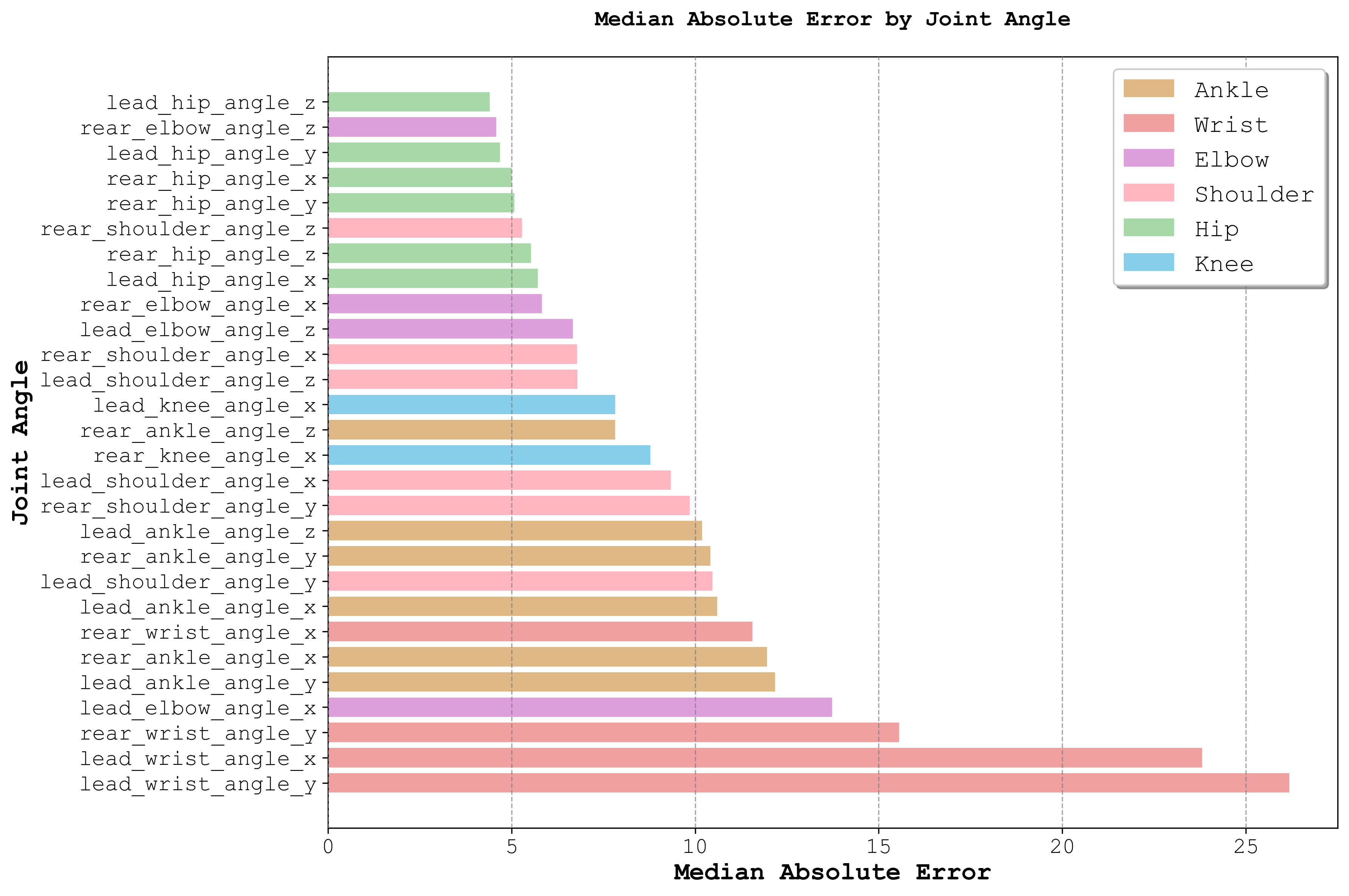

The visualization above displays the 3 joint angles associated with the rear shoulder, but the full OpenBiomechanics project contains 45 joint angles for each swing. This repo contains all the code I’ve developed in my attempt at reproducing Driveline’s official joint angles. So far I’ve worked through 28 of the 45 angles.

Throughout development, I continuously verified my implementation by checking that the joint coordinate systems were anatomically correct and that the resulting joint angles were in the expected ranges. Beyond those spot checks, however, I found it most helpful to compare my joint angles to those in the OBM repo using standard error metrics.

The median absolute errors of my estimates were calculated across every frame of the 656 applicable swings in the repository. Though some joint estimates were more accurate than others, on balance I was pleased with the results, acknowledging that subtle differences in preprocessing steps can lead to deltas of a few degrees. My hip and shoulder coordinate systems seemed to be most closely aligned to Driveline’s. From the markers available in the dataset, I struggled to construct a viable wrist coordinate system, and it showed in the metrics: plainly my orientation is not quite right.
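For reference, the metric itself is simple. Below is a minimal sketch of a median absolute error between an estimated angle trace and a reference trace, in degrees. The toy numbers are invented, and dropping frames where either value is missing is my assumption here, not necessarily how the comparison in the repo handles gaps.

```python
import numpy as np


def median_absolute_error(estimated, reference):
    """Median of |estimated - reference|, ignoring frames with NaN in either."""
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    valid = ~(np.isnan(estimated) | np.isnan(reference))
    return float(np.median(np.abs(estimated[valid] - reference[valid])))


# Toy traces for one joint angle over four frames (made-up values, degrees).
mine = [10.0, 12.0, 15.0, 20.0]
theirs = [11.0, 12.0, 18.0, 19.5]
print(median_absolute_error(mine, theirs))  # 0.75
```

Using the median rather than the mean keeps a handful of badly tracked frames from dominating the score for a joint.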

At any rate, here is the swing that my code performed best on.

And here is the swing on which my code’s estimates differed most from Driveline’s.

Check out the code!